How Do You Feel About Companies With Personal Data

It’s been an exciting week for those of us interested in what companies do with people’s data. The revelation that Cambridge Analytica got its hands on 50 million people’s Facebook data and that Facebook, at least until 2015, made this possible, enabling apps to access not only user data but also that of their friends, has thrown issues that some of us have been researching, teaching, and talking about for a number of years into the public eye.

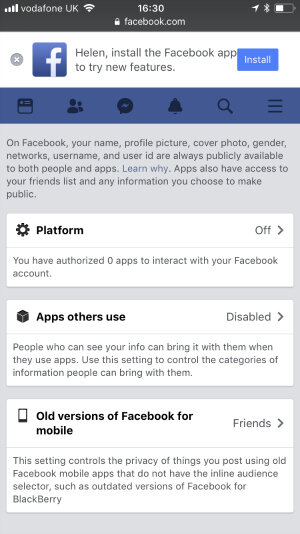

A media storm has erupted in the past few days which has seen widespread speculation about who we should blame. Should users know what they are signing up to and sharing? In other words, should they know better? Facebook certainly thinks that its users have some control over such matters (Zuckerberg’s belated and non-apologetic apology for breaching the trust of its users aside), as can be seen in the claim on one of its data policy pages that “we give you the power to share.”

Or, has Facebook done wrong in establishing the infrastructure to make access to detailed personal data possible? And on a spectrum from “making the world more open and connected” to enabling-new-forms-of-opaque-ubiquitous-and-profitable-surveillance where would each of us situate the company? Facebook itself claims to have acted appropriately, changing the terms of its API in 2014 to make the kind of data collection that led to the Cambridge Analytica case no longer possible, and asking Cambridge Analytica and other relevant parties to destroy the data acquired through means which breach Facebook’s policies.

Alternatively, should Cambridge Analytica and companies like it not use the data they are able to access in the ways that they do? Negative and targeted political campaigning is not new, even if such practices are less transparent in an age of big data. Should Aleksandr Kogan, the Cambridge academic who developed the app thisisyourdigitallife which was used to harvest the data, have acted differently, not passing the data on to Cambridge Analytica, or not being so naïve about what it might do with it? Should university research ethics committees update themselves and better understand how digital networks are transforming social research and related ethical questions? Should wider-scale regulation change, and change fast, to prohibit a future scenario like this one? Commentary abounds, but consensus does not.

With attention focused on a handful of key players, there are a few elephants in the room. The first is the answer to the question: why now? It’s not only social media researchers who have known for some time that platform data is open to uses and abuses in this way. In 2015, The Guardian ran a story about Cambridge Analytica’s work for Republican campaigns – this is how Facebook first discovered that Kogan had shared data with the company, according to a recent Guardian feature. What’s more, trust in Facebook has been declining for some time, as public awareness that something is happening to what we share on social media seems to be growing. As a consequence, platforms’ – not only Facebook’s – opacity-by-design approach to informing users about what they do with personal data is now hitting them where it hurts. The Facebook videos that pop up in users’ timelines, explaining things like how its advertising works, what it does with user data, and how to stay safe on the platform, precede the current embarrassment; they could be seen as a response to the company’s own sense of decreasing user trust and an attempt to win it back.

But the biggest absence in all of this, for me, is one common to debates about widespread uses of digital data and their alleged negative and positive effects – that is, paying attention to what people feel about all of this. A few years ago, the Berliner Gazette claimed that 75% of data that is mined and analysed is a by-product of people’s everyday activities (communication, health and fitness, relationships, transport and mobility, democratic participation, leisure and consumption, to name just a few examples). Despite the importance of such everyday practices in the machine of data mining and analytics, little attention has been paid to the people who undertake them – that is, to ordinary, non-expert folks’ thoughts and feelings about how their data are used, shared, and acted upon. This is as true in data practice and data policymaking as it is in academic research into the growth of big and small data. There have been a few surveys on public attitudes, but they overwhelmingly focus on views about single issues, like privacy or surveillance. If we are to truly understand the current data phenomenon, what we need instead, in my view, is much more qualitative understanding of how different people experience, negotiate, trust, distrust, or resist big data and data mining. (Some researchers have begun this endeavour, such as Nick Couldry and others in their Storycircle project, Virginia Eubanks and Seeta Peña Gangadharan’s Our Data Bodies initiative and Veronica Barassi’s Child Data Citizen. And me, with colleagues, on Seeing Data.)

Understanding what people feel about what companies do with their data is as important as understanding what they know, because hopes, fears, misconceptions, and aspirations play an important role in shaping attitudes to data mining. The emotional dimensions of having to live with more and more data have rarely been noted or discussed, in part because studies of data in society have not attended to ordinary data experiences. Yet emotions play an important role in all aspects of civic and democratic life. As such, they need to be taken seriously in relation to data mining and analytics, as central aspects of experience and as informing and informed by reason and rational thinking.

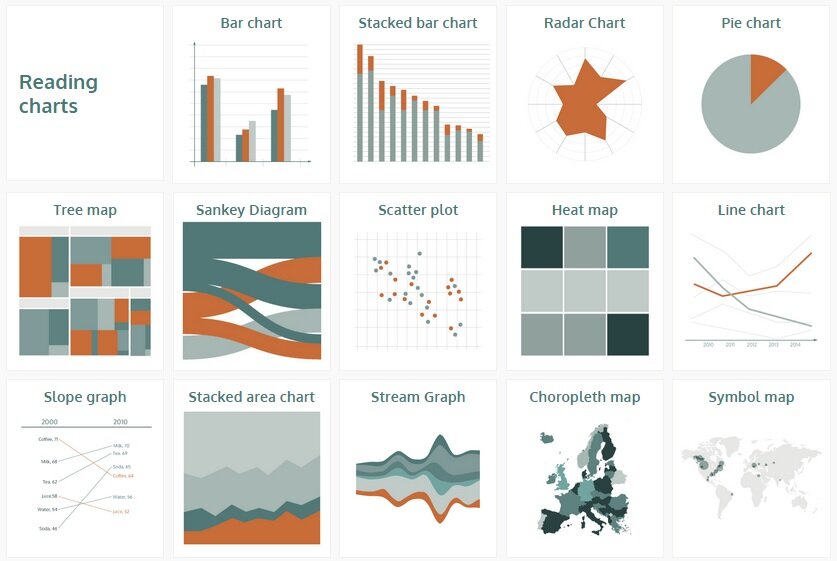

In my own research, I’ve witnessed the important role emotions play in everyday experiences of living with data. For example, in research with a range of actors engaged in social media data mining, in city councils, museums, and the analytics companies they work with, I noticed how much people desire numbers, whether the participants themselves, the clients they work for, or their colleagues. The emotions that came to the surface in this research seemed troubling, but in other research which explored how people engage with visual representations of data, my co-researchers and I found that a broader range of emotions emerged, including pleasure, anger, sadness, guilt, shame, relief, worry, love, empathy, excitement, and offence.

What conclusions can we draw from these findings? A few months ago, a Guardian journalist exercised her data rights by asking the dating platform Tinder to give her the data it held about her – 800 pages of Tinder activity, Facebook likes, Instagram photos, locations, jobs, interests, music tastes, and more. In an article about her experience, a digital technology sociologist is quoted as saying: “apps such as Tinder are taking advantage of a simple emotional phenomenon; we can’t feel data”. Going back a little further, there was much discussion about Donald Trump’s claim that his inauguration attracted the largest inauguration audience in history. In an article in Scientific American outlining how to respond to the crisis in knowledge that Trump’s claims represent, “How to Convince Someone When Facts Fail,” one piece of advice about how to deal with such situations was to “keep emotions out of the exchange.”

Both of these examples suggest that emotions and data are separate. I’m proposing that they’re not – they are intricately interwoven in people’s experiences of data in everyday life. Given their significance, we need much more understanding of how people feel, as well as what they know, about what happens to their data. Arguably, the absence of such understanding has played a role in getting us into the global mess we are now in. After all, in Channel 4’s undercover investigation into the Cambridge Analytica case, an employee is quoted as saying “there’s no good fighting an election campaign on the facts, because actually it’s all about emotions”. They should know.

With the enforcement of the EU’s General Data Protection Regulation around the corner, now is a good time to pay attention to people’s feelings about the mining of their data. This is not in order for companies to continue to profit from our intimate information in ways that we find acceptable. Rather, it is so that data mining, which surely is not going away, can be undertaken in ways which are attentive to people’s views, responsible, and perhaps even “good”, in ways defined and articulated by the people who data analytics affects and on whose data it relies.