Theory and tools in the age of big data

Back in February, I had the privilege of attending Social Science Foo Camp, a flexible-schedule conference hosted in part by SAGE at Facebook HQ where questions of progress in the age of Big Data were a major topic of discussion. What I found most insightful about these conversations is how using or advocating for Big Data is one thing, but making sense of it in the context of an established discipline to do science and scholarship is quite another.

What does it mean to anonymize text?

Text data are a resource that we are only beginning to understand. Many human interactions are moving to the digital world, and we become increasingly sophisticated in documenting interactions. Face-to-face encounters are replaced by written communication (e.g., WhatsApp, Twitter) and every crime incident or hospital visit is recorded. All of these interactions leave a trace in the form of text data.

Making Sense of The Knowledge Explosion with Knowledge Mapping

Bernadette Wright and Steve Wallis introduced knowledge mapping as a part of the evaluation profess.

The ethics of AI and working with data at scale: what are the experts saying

If we were to do a text mining exercise on all the incredible discussions at last week’s conference 100+ Brilliant Women in AI & Ethics, education would beat all other topics by a mile. We talked about educating kids, we had teenagers share their thoughts on AI in poems and essays, and exchanged views on the nuances of teaching ethics in computing and working with large volumes of social data both for computer scientists and experts from other disciplines.

The Tedium of Transcription: Who's Codifying the Process?

Transcribing can be a pain, and although recent progress in speech recognition software has helped, it remains a challenge. Speech recognition programs, do, however, raise ethical/consent issues: what if person-identifiable interview data is transcribed or read by someone who was not given the consent to do so? Furthermore, some conversational elements aren't transcribed well by pattern recognition programs. What, or who, is really transforming the transcription process, then? What's next for transcription?

Who’s disrupting transcription in academia?

Transcribing is a pain, recent progress in speech recognition software has helped, but it is still a challenge. Furthermore, how can you be sure that your person-identifiable interview data is not going to be listened and transcribed by someone who wasn’t on your consensus forms. The bigger disruptor is the ability to annotate video and audio files

10 organizations leading the way in ethical AI

AI is susceptible to misuse and has been found to reflect biases that exist in society. Fortunately, there are a number of organizations committed to addressing ethical questions in AI. We list our top 10.

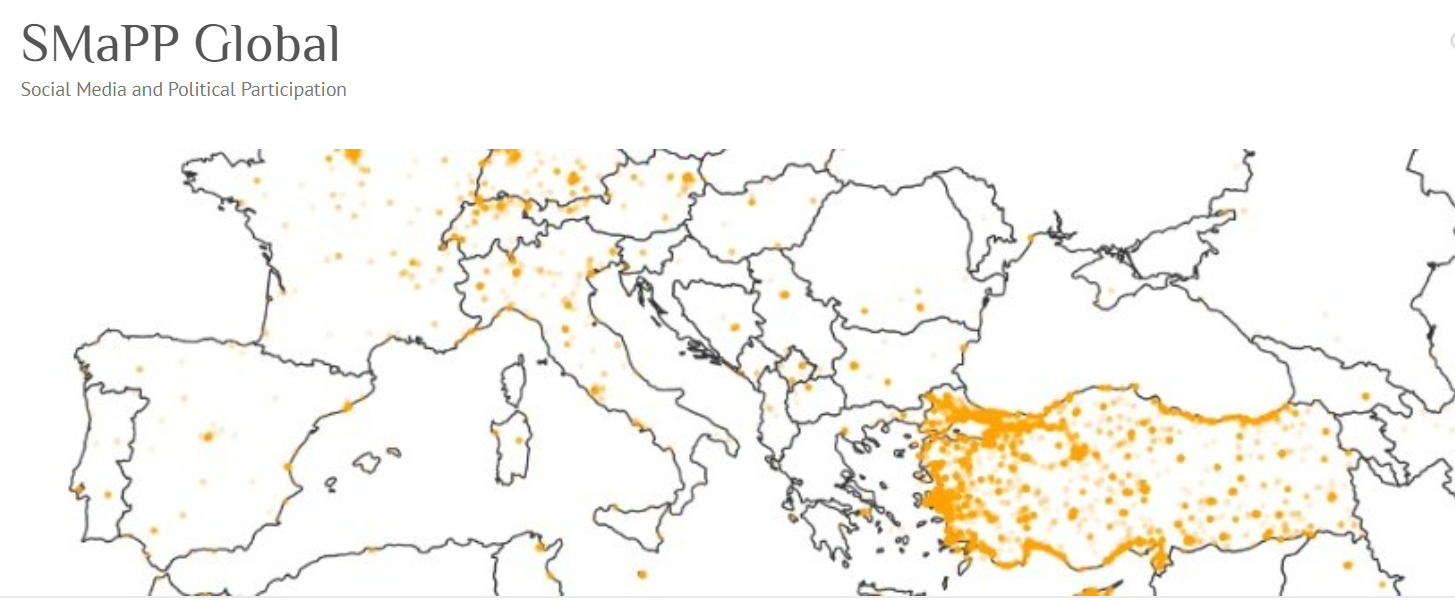

SMaPP-Global: An interview with Josh Tucker and Pablo Barbera

In April this year a special collection examining social media and politics was published in SAGE Open. Guest edited by Joshua A. Tucker and Pablo Barberá, the articles grew out of a series of conferences held by NYU’s Social Media and Political Participation lab (SMaPP) and the NYU Global Institute for Advanced Study (GIAS) known as SMaPP-Global. Upon publication Joshua Tucker said ‘the collection of articles also shows the value of exposing researchers from a variety of disciplines with similar substantive interests to each other's work at regular intervals’. Interdisciplinary collaborative research projects are a cornerstone of what makes computational social science such an interesting field. We were intrigued to know more so caught up with Josh and Pablo to hear more.

Making sensitive text data accessible for computational social science

Text is everywhere, and everything is text. More textual data than ever before are available to computational social scientists—be it in the form of digitized books, communication traces on social media platforms, or digital scientific articles. Researchers in academia and industry increasingly use text data to understand human behavior and to measure patterns in language. Techniques from natural language processing have created a fertile soil to perform these tasks and to make inferences based on text data on a large scale.

How to get a DOI for your teaching materials with Zenodo

Academics face various pressures, from research teaching and administrative duties. The best way to create a positive culture in academia is to share. However, it may sometimes feel like there is no incentive to share teaching materials, if I have spent so many hours developing this work, why should I just hand it over to someone, “what’s in it for me?”

No more tradeoffs: The era of big data content analysis has come

For centuries, being a scientist has meant learning to live with limited data. People only share so much on a survey form. Experiments don’t account for all the conditions of real world situations. Field research and interviews can only be generalized so far. Network analyses don’t tell us everything we want to know about the ties among people. And text/content/document analysis methods allow us to dive deep into a small set of documents, or they give us a shallow understanding of a larger archive. Never both. So far, the truly great scientists have had to apply many of these approaches to help us better see the world through their kaleidoscope of imperfect lenses.

Social scientists working with LinkedIn data

Today, researchers are using LinkedIn data in a variety of ways: to find and recruit participants for research and experiments (Using Facebook and LinkedIn to Recruit Nurses for an Online Survey), to analyze how the features of this network affect people’s behavior and identity or how data is used for hiring and recruiting purposes, or most often to enrich other data sources with publicly available information from selected LinkedIn profiles (Examining the Career Trajectories of Nonprofit Executive Leaders, The Tech Industry Meets Presidential Politics: Explaining the Democratic Party’s Technological Advantage in Electoral Campaigning).

Instead of seeing criticisms of AI as a threat to innovation, can we see them as a strength?

At CogX, the Festival of AI and Emergent Technology, two icons appeared over and over across the King’s Cross location. The first was the logo for the festival itself, an icon of a brain with lobes made up of wires. The second was for the 2030 UN Sustainable Development Goals (SDGs), a partner of the festival. The SDG icon is a circle split into 17 differently colored segments, each representing one of the goals for 2030—aims like zero hunger and no poverty. The idea behind this partnership was to encourage participants of CogX—speakers, presenters, expo attendees—to think about how their products and innovations could be used to help achieve these SDGs.

2018 Concept Grant winners: An interview with MiniVan

Following the launch of the SAGE Ocean initiative in February 2018, the inaugural winners of the SAGE Concept Grant program were announced in March of the same year. As we build up to this year’s winner announcement we’ve caught up with the three winners from 2018 to see what they’ve been up to and how the seed funding has helped in the development of their tools.

In this post we chatted to MiniVan, a project of the Public Data Lab.

Tapping into the hidden power of big search data

Sam Gilbert demonstrates the value of big search data for social scientists, and suggests some practical steps to using internet search data in your own research.

Social media data in research: a review of the current landscape

Social media has brought about rapid change in society, from our social interactions and complaint systems to our elections and media outlets. It is increasingly used by individuals and organizations in both the public and private sectors. Over 30% of the world’s population is on social media. We spend most of our waking hours attached to our devices, with every minute in the US, 2.1M snaps are created and 1M people are logging in to Facebook. With all this use, comes a great amount of data.

2018 Concept Grant winners: An interview with Ken Benoit from Quanteda

We catch up with Ken Benoit, who developed Quanteda, a large R package originally designed for the quantitative analysis of textual data, from which the name is derived. In 2018, Quanteda received $35,000 of seed funding as inaugural winners of the SAGE Concept Grants program. We find out what challenges Ken faced and how the funding helped in the development of the package.

2018 SAGE Concept Grant winners: An interview with the Digital DNA Toolbox team

Following the launch of the SAGE Ocean initiative in February 2018, the inaugural winners of the SAGE Concept Grant program were announced in March of the same year. As we build up to this year’s winner announcement we’ve caught up with the three winners from 2018 to see what they’ve been up to and how the seed funding has helped in the development of their tools.

In this post, we spoke with the Digital DNA Toolbox (DDNA) winners, Stefano Cresci and Maurizio Tesconi about their initial idea, the challengers they faced along the way and the future of tools for social science research.