New Approach to Research Evaluation: Evaluative Inquiry

by Tjitske Holtrop

During May 2022, SAGE Methodspace is focused on evaluation and other types of applied research. This post, originally published on the LSE Impact Blog, discusses the importance of evaluating research.

Academic evaluation regimes set up to quantify the quality of research, individual scholars, and institutions have been widely criticized for the detrimental effects they have on academic environments and on knowledge production itself. Max Fochler and Sarah de Rijcke recently called for a more exploratory, less standardized way of doing research evaluation, with the introduction of the concept of the evaluative inquiry. We have since put their call into practice in the context of two commissioned projects: one advising a theology department on research evaluation, and another advising a university on the self-assessment element of the Dutch evaluation protocol. As one of the project members, I propose four principles to give further shape to the evaluative inquiry’s method (how), focal point (what), subject (who), and ambition (why).

Versatile methods: from representation to reconsideration

Metrics play an important role in the representation and communication of academic excellence. Many initiatives (among others, DORA and the Leiden Manifesto) argue that metrics and citation scores alone aren’t appropriate instruments to represent academic excellence. The evaluative inquiry builds on these initiatives without endorsing their, in our view, unproductive dichotomy between quantitative and qualitative ways of evaluating research. Instead, we propose versatile methods allowing choice from a range of methods such as interviews, workshops, scientometrics, and contextual response analyses. Versatile methods will help us to understand scientific environments in additional ways but, more importantly, they will offer a range of impulses that compel project partners as well as analysts to rethink established ways of evaluating academic quality.

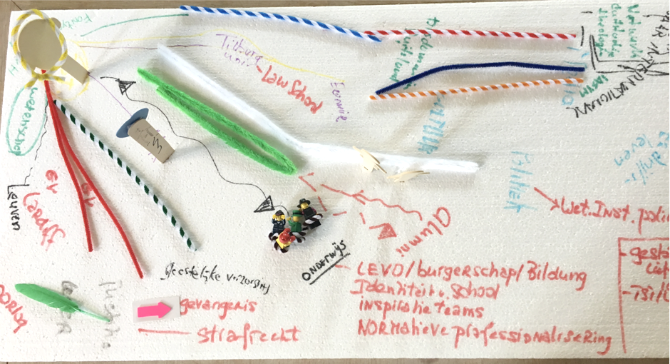

Working with the theology department, we decided to combine interviews with the less familiar method of a design thinking workshop. The workshop seemed a perfect tool by which to engage participants in unusual ways, get them to think outside the box, and gain additional insights into the workings of the department. Not all theologians valued the workshop as a meaningful exercise, however. One exercise encouraged participants to build their own theological ecosystems using pipe cleaners, paperclips, and Lego figurines. One theologian responded by frowning and walking away.

Even amongst ourselves, we didn’t immediately know what to make of our experimental methodology or what epistemological status to afford a full day of crafts, materials, and playfully invented fables and rituals. This disconcertment compelled us to reconsider some of the entrenched assumptions about the what, where, how, and who of academic quality. These conversations offer a mode of inquiry that is radically different from established evaluative protocols – quantified or not – as these conversations do not start from institutionalized parameters.

Contextual focus: from individuals to building common worlds

Academic evaluations are typically constructed around the idea that academic quality is a value that can be attributed to an individual unit (a scholar, department, or institution). Adding societal relevance to the ledger of academic accounting hasn’t moved the focus away from individual excellence: publications alone aren’t enough to demonstrate excellence, one now has to prove societal relevance as well. This focus on the individual renders academic organisations and society of interest and value only to the extent that they produce individual excellence. The evaluative inquiry moves away from the individual and, instead, takes as its focal point the tangled feedback relations between academic themes and different academic and social contexts. Contextual focus, I propose, is the element of the evaluative inquiry that detects these vibrant relations and moves them centre stage.

An example is a disagreement within one group of theologians about what good science looks like. This disagreement was grounded in differing epistemic commitments coinciding with denominational loyalties, debating for example the nature of evidence for and against theism. One could say this struggle was contextual noise hindering the flourishing of academic units. Instead, we suggested focusing on the conflict as a lively debate on how to collaborate in spite of such differences. Participants in this particular debate could then be considered experts in multi-faith collaboration who could exchange knowledge with societal domains that grapple with similar issues, such as multicultural education or refugees’ integration in Dutch society. Applying this logic more broadly, individual excellence is decentralized in favor of an appreciation of the dense and lively interaction between academic themes and their multiple contexts.

Knowledge diplomacy: from standardisation to mediation

One critique of academic evaluation argues it has become a ritual of verification that has turned what is extremely political into mundane, bureaucratic matters to be dealt with by experts as mere technicalities. The project with the Catholic theologians made us rethink our own roles. Catholic theologians are guided by the notion of re-actualization, which is an age-old exercise of re-articulating the meaning and form of Christianity in the face of adversity. Re-actualization struck us as a kind of self-evaluation, which made clear to us that evaluators are not the only experts of value. These insights may enrich the repertoire of the evaluator and offer lessons that may be drawn on in subsequent encounters. What is more, the temporal scope and reference to adversity within the idea of re-actualization served as a reminder of the stakes of the evaluation project.

The figure of the diplomat allows us to take the evaluation project seriously as both technical and political, both furthering our understanding of different knowledge traditions and working towards making the encounter work for all parties involved. Rather than commanding compatibility with a single register of values, as the bureaucrat does, the diplomat negotiates ways forward together, despite an apparent incongruence of worldviews or ambitions.

Accountable conversations: from reporting to ongoing engagement

Academic evaluations typically have a clear sense of boundaries: cause and effect, beginnings and ends. Following methodological protocol yields representative results, evaluators examine the evaluated subject, and the open question of academic quality is often quickly closed with a (bibliometric) report. While we recognize the academic work that goes into keeping boundaries and data stable and clean, the evaluative inquiry wants to engage with the uncertainty of evaluation work and the politics of formats, protocols, and endings.

At a recent conference Sarah de Rijcke presented our work around the evaluative inquiry and was asked how this approach could work within the hierarchies and power struggles of current academia and science policy. One answer is that even without being able to offer a definitive analysis, we firmly believe in an ongoing discussion of the fault lines between forms of value, the uncertainties embedded within academic evaluations — from precarious academic positions to the scaffolding that is needed to turn uncertain scientific claims into certain ones — and the politics of choices and cuts that are made. While strongly advocating rigorous analytical work in the field of academic evaluations, the evaluative inquiry is equally about the conversation with academics, policymakers, and others interested in academic evaluation.

Looking forward

CWTS will continue the experiment of research evaluations. These four principles around the how, what, who, and why of the evaluative inquiry will hopefully turn what might otherwise be considered liabilities in evaluation into sources of inspiration.

Author's Note: I would like to thank CWTS’s SES team, and most notably Sarah de Rijcke, Jochem Zuijderwijk, Thomas Franssen, and Anne Beaulieu, as well as Ad Prins, the theologians themselves, and Julien McHardy for their valuable contributions to the evaluative inquiry projects and this blog post.
