Essential new content on Sage Research Methods platform – 2024 round up

Sample a selection of the most helpful methods videos and guides published in 2024 on the Sage Research Methods online platform with free access.

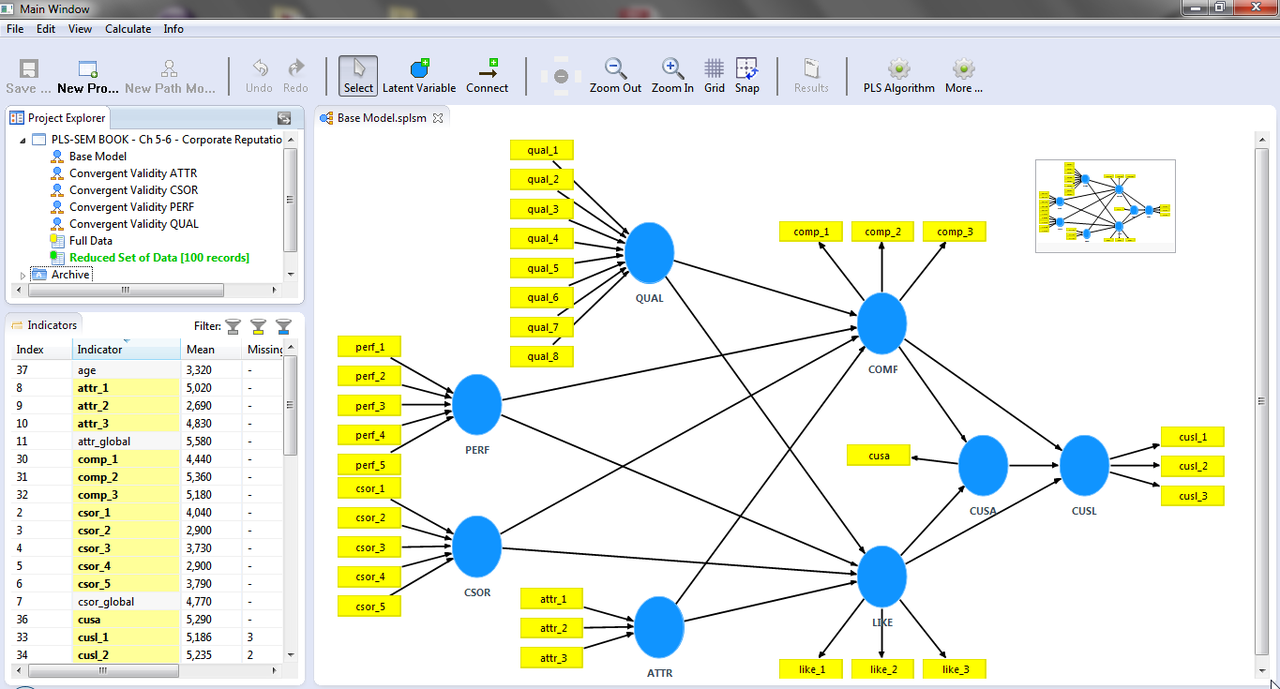

Partial Least Squares Structural Equation Modeling: An Emerging Tool in Research

Partial least squares structural equation modeling (PLS-SEM) enables researchers to model and estimate complex cause-effect relationships.

Image as data: Automated visual content analysis for social science

Images contain information absent in text, and this extra information presents opportunities and challenges. It is an opportunity because one image can document variables that text sources (newspaper articles, speeches or legislative documents) struggle to capture, or that sit in datasets too large to code manually. Learn how to overcome the challenges.

Quantitative Research with Nonexperimental Designs

What is the difference between experimental and non-experimental research design? We look at how non-experimental design distinguishes itself from experimental design and how it can be applied in the research process with open-access examples.

Building Quantitative Skills in Postsecondary Social Sciences Courses

Download the free report from the project, “Fostering Data Literacy: Teaching with Quantitative Data in the Social Sciences.” This report explores two key challenges to teaching with data: helping students overcome anxieties about math and synchronizing the interconnected methodological, software, and analytic competencies.

Emotion and reason in political language

In the day-to-day of political communication, politicians constantly decide how to amplify or constrain emotional expression, in service of signalling policy priorities or persuading colleagues and voters. We propose a new method for quantifying emotionality in politics using the transcribed text of politicians’ speeches. This new approach, described in more detail below, uses computational linguistics tools and can be validated against human judgments of emotionality.
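As a rough illustration of what quantifying emotionality from transcribed speech can look like, the sketch below scores a speech by the share of emotion words among all words found in two small word lists. It is not the method proposed in the post. The word lists, the `emotionality` function, and the example speeches are hypothetical and exist only to make the idea concrete.

```python
import re

# Hypothetical toy lexicons, invented for this illustration only.
EMOTION_WORDS = {"fear", "hope", "proud", "angry", "love", "outrage", "shame"}
REASON_WORDS = {"evidence", "estimate", "analysis", "data", "therefore", "cost", "rate"}


def emotionality(speech: str) -> float:
    """Share of lexicon hits that are emotion words (0 = all reason, 1 = all emotion)."""
    tokens = re.findall(r"[a-z']+", speech.lower())
    emotion_hits = sum(token in EMOTION_WORDS for token in tokens)
    reason_hits = sum(token in REASON_WORDS for token in tokens)
    total = emotion_hits + reason_hits
    # Return a neutral 0.5 when the speech contains no lexicon words at all.
    return emotion_hits / total if total else 0.5


# Hypothetical example speeches.
speeches = {
    "speaker_a": "I am proud and full of hope, but I fear this bill.",
    "speaker_b": "The data and the analysis show the cost estimate is low.",
}
scores = {name: emotionality(text) for name, text in speeches.items()}
```

A score like this is only meaningful once it has been checked against human judgments of emotionality, which is the kind of validation the paragraph above refers to.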

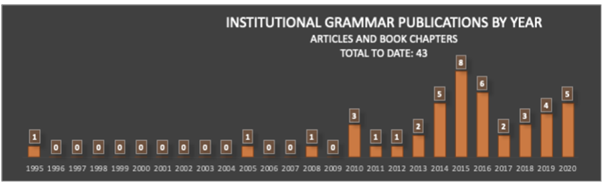

Understanding institutions in text

Institutions, the rules that govern behavior, are among the most important social artifacts of society. So it should come as a great shock that we still understand them so poorly. How are institutions designed? What makes institutions work? Is there a way to systematically compare the language of different institutions? One recent advance is bringing us closer to making these questions quantitatively approachable. The Institutional Grammar (IG) 2.0 is an analytical approach, drawn directly from classic work by Nobel Laureate Elinor Ostrom, that is providing the foundation for computational representations of institutions. IG 2.0 is a formalism for translating human-language outputs (policies, rules, laws, decisions, and the like) into abstract structures defined precisely enough to be manipulable by computer. Recent work, supported by the National Science Foundation (RCN: Coordinating and Advancing Analytical Approaches for Policy Design & GCR: Collaborative Research: Jumpstarting Successful Open-Source Software Projects With Evidence-Based Rules and Structures) and leveraging recent advances in natural language processing highlighted on this blog, is vastly accelerating the rate and quality of computational translations of written rules.

Case Study Methods and Examples

What is case study methodology? It is distinguished by one characteristic: case studies draw from more than one data source. In this post, find definitions and a collection of multidisciplinary examples.

Experiments and Quantitative Research

Learn about experimental research designs and read open-access studies.

text: An R-package for Analyzing Human Language

In the field of artificial intelligence (AI), Transformers have revolutionized language analysis. Never before has a new technology universally improved the benchmarks of nearly all language processing tasks: e.g., general language understanding, question answering, and Web search. The transformer method itself, which probabilistically models words in their context (i.e. “language modeling”), was introduced in 2017, and the first large-scale pre-trained general-purpose transformer, BERT, was released as open source by Google in 2018. Since then, BERT has been followed by a wave of new transformer models including GPT, RoBERTa, DistilBERT, XLNet, Transformer-XL, CamemBERT, XLM-RoBERTa, etc. The text package makes all of these language models and many more easily accessible to R users, and includes functions optimized for human-level analyses tailored to social scientists.

Unbundling the remote quantitative methods academic: Coolest tools to support your teaching

This year’s lockdown challenged the absolute core of higher education and accelerated, or rather imposed, the adoption of digital tooling to fully replace the interactivity of the physical classroom. And while other industries might have suffered losses, the edtech space flourished, with funding for edtech almost doubling in the first half of 2020 versus 2019. Even before the pandemic, lecturers were starting to feel overwhelmed by the amount of choice to support their teaching. More funding just meant more hype, more tools, and more tools working on similar or slightly improved solutions, making it even harder and more time-consuming to find and adapt them in a rush.

Below, we take a look at several tools and startups that are already supporting many of you in teaching quantitative research methods; and some cool new tools you could use to enhance your classroom.

Life on the screen

As technology becomes more integral to everything we do, the time we spend in front of screens such as smartphones and computers continues to increase. The pervasiveness of screen time has raised concerns among researchers, policymakers, educators, and health care professionals about the effects of digital technology on well-being. Despite growing concerns about digital well-being, it has been a challenge for scientists to measure how we actually navigate the digital landscape through our screens. For example, it is well documented that self-reports of one’s media use are often inaccurate despite survey respondents’ best efforts. Just knowing screen time spent on individual applications does not fully capture a person’s usage of the digital device either. Some could spend an hour on YouTube watching people play video games whereas others might spend the same amount of time watching late night television talk shows to keep up to date with the news. Even though the screen time is the same for the same application, the intentions and values of consumption of certain types of content can be vastly different among users.

Gary King makes all lectures for Quantitative Social Science Methods course free online

What is the field of statistical analysis? So begins Gary King’s first online course in the Harvard Government Department graduate methods sequence. King, the Albert J. Weatherhead III University Professor at Harvard University (one of 25 with Harvard's most distinguished faculty title) and Director of the Institute for Quantitative Social Science, has just recorded all his lectures and made them free to access online. The videos range in length from 30 minutes to an hour and a half, and you can watch them all on YouTube.

My journey into text mining

My journey into text mining started when the institute of Digital Humanities (DH) at the University of Leipzig invited students from other disciplines to take part in their introductory course. I was enrolled in a sociology degree at the time, and this component of data science was not part of the classic curriculum; however, I could explore other departments through course electives and the DH course sounded like the perfect fit.

Moving your behavioral research online

COVID-19 has affected research all over the world. With universities closing their campuses and governments issuing restrictions on social gatherings, behavioral research in the lab has ground to a halt. This situation is urgent. Ongoing studies have been disrupted and upcoming studies cannot begin until they are adapted to the new reality. At Volunteer Science, we’re helping researchers around the world navigate these changes. In this post, I’ll condense the most important recommendations we’re giving to researchers for translating their studies into an online format and recruiting virtual participants.

Devoted users: EU elections and gamification on Twitter

Our study, whose preliminary results we recently presented at the 2019 SISP (Italian Political Science Association) Conference, examines the visibility of the tweets posted by Italian political leaders during the last EU Elections campaign. We show how crowd-sourced and spontaneous political action, triggered by a social media game, can take on an almost social-bot-like nature and significantly boost the visibility of tweets by political leaders during a major political event.

Theory and tools in the age of big data

Back in February, I had the privilege of attending Social Science Foo Camp, a flexible-schedule conference hosted in part by SAGE at Facebook HQ where questions of progress in the age of Big Data were a major topic of discussion. What I found most insightful about these conversations is how using or advocating for Big Data is one thing, but making sense of it in the context of an established discipline to do science and scholarship is quite another.

2018 Concept Grant winners: An interview with Ken Benoit from Quanteda

We catch up with Ken Benoit, who developed Quanteda, a large R package originally designed for the quantitative analysis of textual data, from which the name is derived. In 2018, Quanteda received $35,000 of seed funding as an inaugural winner of the SAGE Concept Grants program. We find out what challenges Ken faced and how the funding helped in the development of the package.

Collecting social media data for research

Human social behavior has rapidly shifted to digital media services, whether Gmail for email, Skype for phone calls, Twitter and Facebook for micro-blogging, or WhatsApp and SMS for private messaging. This digitalization of social life offers researchers an unprecedented world of data with which to study human life and social systems. However, accessing this data has become increasingly difficult.