Populations and Participants in Online Studies

By Janet Salmons, Ph.D., Manager, Sage Research Methods Community

Dr. Salmons is the author of Doing Qualitative Research Online and Gather Your Data Online. Use the code COMMUNIT24 for 25% off research books purchased from Sage through December 31, 2024.

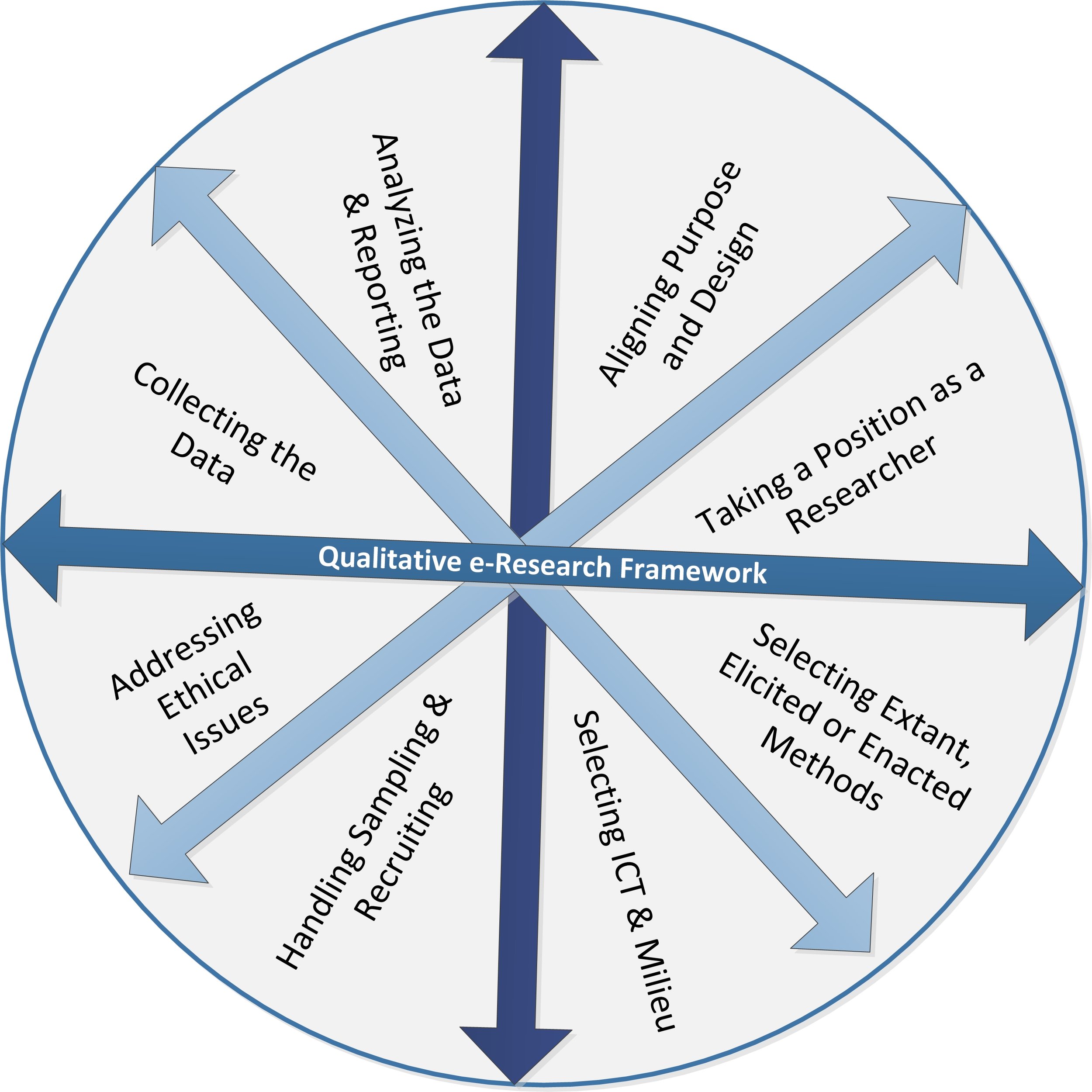

Some online researchers collect extant, or existing, data. Others rely on participants to answer interview or survey questions, be a part of experiments, or engage in creative interactions. What are some of the issues researchers face, and what are the experiences of online participants? This collection of open-access articles includes diverse examples and perspectives.

Gil-López, T., Christner, C., de León, E., Makhortykh, M., Urman, A., Maier, M., & Adam, S. (2023). Do (Not!) Track Me: Relationship Between Willingness to Participate and Sample Composition in Online Information Behavior Tracking Research. Social Science Computer Review, 41(6), 2274-2292. https://doi.org/10.1177/08944393231156634

Abstract. This paper offers a critical look at the promises and drawbacks of a popular, novel data collection technique—online tracking—from the point of view of sample composition. Using data from two large-scale studies about political attitudes and information consumption behavior carried out in Germany and Switzerland, we find that the likelihood of participation in a tracking study at several critical dropout points is systematically related to the gender, age, and education of participants, with men, young, and more educated participants being less likely to drop out of the studies. Our findings also show that these patterns are incremental, as changes in sample composition accumulate over successive study stages. Political interest and ideology were also significantly related to the likelihood of participation in tracking research. The study explores some of the most common concerns associated with tracking research leading to non-participation, finding that they also differ across demographic groups. The implications of these findings are discussed.

Hannemann, M., Henn, S., & Schäfer, S. (2023). Participation in Online Research: Towards a Typology of Research Subjects with Regard to Digital Access and Literacy. International Journal of Qualitative Methods, 22. https://doi.org/10.1177/16094069231205188

Abstract. The Covid-19 pandemic has severely impacted empirical research practices relying on face-to-face interactions, such as interviews and group discussions. Confronted with pandemic management measures such as lockdowns, researchers at the height of the pandemic were widely limited to the use of online methods that did not enable direct contact with their research subjects. Even as the pandemic subsides, online data collection procedures are being widely applied, in many cases possibly recklessly. In this paper, we urge the implementation of a reflective approach to online research. In particular, we argue that both digital access and the research subjects’ digital literacy affect participation in online research and thus also the quality of the research. By combining these two dimensions, we develop a typology of four types of research subjects (digital outcasts, illiterates, sceptics and natives) that allows researchers to adapt their data collection to the specifics of each research situation. We illustrate these types in the context of our own research projects and discuss them with regard to three main challenges of empirical research, i.e., acquiring participants, establishing a basis for conversation, and maintaining ethical standards. We conclude by developing recommendations that help researchers to deal with these challenges in the context of online research to avoid unintended biases.

Harvey, O., van Teijlingen, E., & Parrish, M. (2023). Using a Range of Communication Tools to Interview a Hard-to-Reach Population. Sociological Research Online, 0(0). https://doi.org/10.1177/13607804221142212

Abstract. Online communication tools are increasingly being used by qualitative researchers; hence it is timely to reflect on the differences when using a broad range of data collection methods. Using a case study with a potentially hard-to-reach substance-using population who are often distrustful of researchers, this article explores the use of a variety of different platforms for interviews. It highlights both the advantages and disadvantages of each method. Face-to-face interviews and online videos offer more opportunity to build rapport, but lack anonymity. Live Webchat and audio-only interviews offer a high level of anonymity, but both may incur a loss of non-verbal communication, and in the Webchat a potential loss of personal narrative. This article is intended for sociologists who wish to broaden their methods for conducting research interviews.

Mackenzie, E., Berger, N., Holmes, K., & Walker, M. (2021). Online educational research with middle adolescent populations: Ethical considerations and recommendations. Research Ethics, 17(2), 217-227. https://doi.org/10.1177/1747016120963160

Abstract. Adolescent populations have become increasingly accessible through online data collection methods. Online surveys are advantageous in recruiting adolescent participants and can be designed for adolescents to provide informed consent without the requirement of parental consent. This study sampled 338 Australian adolescents to participate in a low risk online survey on adolescents’ experiences and perceptions of their learning in science classes, without parental consent. Adolescents were recruited through Facebook and Instagram advertising. In order to judge potential participants’ capacity to consent, two multiple-choice questions about the consent process were required to be answered correctly prior to accessing the survey. This simple strategy effectively determined whether middle adolescents had the capacity to provide informed consent to participate in low risk online educational research.

Schweinhart, A., Atwood, K., Aramburu, C., Bauer, R., Luseno, W., & Simons-Rudolph, A. (2023). Prioritizing Participant Safety During Online Focus Groups With Women Experiencing Violence. International Journal of Qualitative Methods, 22. https://doi.org/10.1177/16094069231216628

Abstract. Although researchers have convened focus groups to collect individuals’ opinions for some time, many have transitioned data collection from in-person to online groups. Online focus groups became even more important as COVID-19-related restrictions to movement and shared spaces interrupted the ability of researchers to collect data in person. However, little is published about conducting online groups with populations whose physical and emotional safety is at risk, such as those experiencing domestic violence. Working with these populations raises concerns related to participant safety, mental and emotional well-being, COVID-19 social distancing requirements, participant confidentiality, and data security. To address these concerns, we developed a set of protocols for conducting online focus groups with individuals whose experiences put them at high risk for emotional distress and social harm due to research participation. As part of a larger project with community-based partners, we sought more information on the impact of violence on mothers. IRB-approved online protocols were successfully implemented by researchers during two focus groups, each with five to six participants (mothers) who had experienced violence. Innovative strategies included identifying the safest virtual platform, having a licensed counselor and advocates on standby during the focus groups, developing trauma-informed distress and disclosure protocols, using technological safeguards to ensure confidentiality (e.g., assigning non-identifying nicknames in waiting rooms before the online focus group), providing onsite technical assistance, and co-locating participants in the same building while maintaining social distancing. The successful implementation of these methods suggests that it is possible to collect quality information on sensitive topics using a virtual format while maintaining confidentiality and using a trauma and resilience-informed approach. We hope that future data collection efforts with groups experiencing behavioral health risks related to research participation may benefit from sharing this methodology.

Paid Participants and Research Services

Companies that offer online survey platforms, such as SurveyMonkey, also offer services that connect researchers with participants. Amazon’s Mechanical Turk, or MTurk, is another source of survey participants. As with any service, there are pros (timeliness and efficiency) and cons (cost, and a design geared to market research rather than academic research). If you are considering a study with participants engaged through a third-party service, this collection of articles explores the use of such approaches and the experience of participants.

Aguinis, H., Villamor, I., & Ramani, R. S. (2021). MTurk Research: Review and Recommendations. Journal of Management, 47(4), 823-837. https://doi.org/10.1177/0149206320969787

Abstract. The use of Amazon’s Mechanical Turk (MTurk) in management research has increased over 2,117% in recent years, from 6 papers in 2012 to 133 in 2019. Among scholars, though, there is a mixture of excitement about the practical and logistical benefits of using MTurk and skepticism about the validity of the data. Given that the practice is rapidly increasing but scholarly opinions diverge, the Journal of Management commissioned this review and consideration of best practices. We hope the recommendations provided here will serve as a catalyst for more robust, reproducible, and trustworthy MTurk-based research in management and related fields.

Hargittai, E., & Shaw, A. (2020). Comparing Internet Experiences and Prosociality in Amazon Mechanical Turk and Population-Based Survey Samples. Socius, 6. https://doi.org/10.1177/2378023119889834

Abstract. Given the high cost of traditional survey administration (postal mail, phone) and the limits of convenience samples such as university students, online samples offer a much welcomed alternative. Amazon Mechanical Turk (AMT) has been especially popular among academics for conducting surveys and experiments. Prior research has shown that AMT samples are not representative of the general population along some dimensions, but evidence suggests that these differences may not undermine the validity of AMT research. The authors revisit this comparison by analyzing responses to identical survey questions administered to both a U.S. national sample and AMT participants at the same time. The authors compare the two samples on sociodemographic factors, online experiences, and prosociality. The authors show that the two samples are different not just demographically but also regarding their online behaviors and standard survey measures of prosocial behaviors and attitudes. The authors discuss the implications of these findings for data collected on AMT.

Iles, I. A., Gaysynsky, A., & Sylvia Chou, W.-Y. (2022). Effects of Narrative Messages on Key COVID-19 Protective Responses: Findings From a Randomized Online Experiment. American Journal of Health Promotion, 36(6), 934-947. https://doi.org/10.1177/08901171221075612

Abstract. We investigated the effectiveness of narrative vs non-narrative messages in changing COVID-19-related perceptions and intentions. The study employed a between-subjects two-group (narratives vs non-narratives) experimental design and was administered online. 1804 U.S. adults recruited via Amazon MTurk in September 2020 were randomly assigned to one of two experimental conditions and read either three narrative or three non-narrative messages about social distancing, vaccination, and unproven treatments.

Loepp, E., & Kelly, J. T. (2020). Distinction without a difference? An assessment of MTurk Worker types. Research & Politics, 7(1). https://doi.org/10.1177/2053168019901185

Abstract. Amazon’s Mechanical Turk (MTurk) platform is a popular tool for scholars seeking a reasonably representative population to recruit subjects for academic research that is cheaper than contract work via survey research firms. Numerous scholarly inquiries affirm that the MTurk pool is at least as representative as college student samples; however, questions about the validity of MTurk data persist. Amazon classifies all MTurk Workers into two types: (1) “regular” Workers, and (2) more qualified (and expensive) “master” Workers. In this paper, we evaluate how choice in Worker type impacts the nature of research samples in terms of characteristics/features and performance. Our results identify few meaningful differences between master and regular Workers. However, we do find that master Workers are more likely to be female, older, and Republican, than regular Workers. Additionally, master Workers have far more experience, having spent twice as much time working on MTurk and having completed over seven times the number of assignments. Based on these findings, we recommend that researchers ask for Worker status and number of assignments completed to control for effects related to experience. However, the results imply that budget-conscious scholars will not compromise project integrity by using the wider pool of regular Workers in academic studies.

McKenzie, M. de J. (2024). Precarious Participants, Online Labour Platforms and the Academic Mode of Production: Examining Gigified Research Participation. Critical Sociology, 50(2), 241-254. https://doi.org/10.1177/08969205231180384

Abstract. In an economic environment defined by precarious and gig-based labour contracts, academic research has been reimagined as a source of income for research participants. In addition, with the rise of online labour platforms, researchers have turned to online labour platforms as a solution to the increasing difficulty in recruitment of participants in research. This present context makes explicit the hidden labour that research participants have always done in the production of research outputs within academia. This paper develops a Marxist lens through which we can understand the material conditions of the circulation of capital through academia and the role of research participants in this mode of production. By developing this broad analytical framework for the academic mode of production, this paper further argues that our present economic epoch of the gig economy and specifically the use of digital labour platforms for academic research, has accelerated the subsumption of research participation as a source of income through the fragmentation of work and the gigification of everyday life.

Moss, A. J., Hauser, D. J., Rosenzweig, C., Jaffe, S., Robinson, J., & Litman, L. (2023). Using Market-Research Panels for Behavioral Science: An Overview and Tutorial. Advances in Methods and Practices in Psychological Science, 6(2). https://doi.org/10.1177/25152459221140388

Abstract. Behavioral scientists looking to run online studies are confronted with a bevy of options. Where to recruit participants? Which tools to use for survey creation and study management? How to maintain data quality? In this tutorial, we highlight the unique capabilities of market-research panels and demonstrate how researchers can effectively sample from such panels. Unlike the microtask platforms most academics are familiar with (e.g., MTurk and Prolific), market-research panels have access to more than 100 million potential participants worldwide, provide more representative samples, and excel at demographic targeting. However, efficiently gathering data from online panels requires integration between the panel and a researcher’s survey in ways that are uncommon on microtask sites. For example, panels allow researchers to target participants according to preprofiled demographics (“Level 1” targeting, e.g., parents) and demographics that are not preprofiled but are screened for within the survey (“Level 2” targeting, e.g., parents of autistic children). In this article, we demonstrate how to sample hard-to-reach groups using market-research panels. We also describe several best practices for conducting research using online panels, including setting in-survey quotas to control sample composition and managing data quality. Our aim is to provide researchers with enough information to determine whether market-research panels are right for their research and to outline the necessary considerations for using such panels.
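For readers unfamiliar with in-survey quotas, the idea mentioned in the abstract above can be illustrated with a small sketch. This is not the authors' tutorial code or any panel provider's API: the `QuotaScreener` class, the `run_screening` helper, the `age_group` field, and the target numbers are all hypothetical, chosen only to show how a survey might stop admitting respondents from a demographic cell once that cell is full.

```python
from dataclasses import dataclass, field


@dataclass
class QuotaScreener:
    """Tracks in-survey quotas per demographic cell (hypothetical helper)."""
    targets: dict[str, int]                       # cell name -> completes wanted
    counts: dict[str, int] = field(default_factory=dict)

    def admit(self, cell: str) -> bool:
        """Return True and count the respondent if their cell is still open."""
        target = self.targets.get(cell, 0)        # unknown cells are screened out
        filled = self.counts.get(cell, 0)
        if filled >= target:
            return False
        self.counts[cell] = filled + 1
        return True


def run_screening(respondents, screener, cell_key="age_group"):
    """Split arriving respondents into admitted and screened-out lists."""
    admitted, screened_out = [], []
    for person in respondents:
        if screener.admit(person[cell_key]):
            admitted.append(person)
        else:
            screened_out.append(person)
    return admitted, screened_out


# Illustrative data only: four arrivals, quotas of two and one per cell.
arrivals = [
    {"id": 1, "age_group": "18-34"},
    {"id": 2, "age_group": "18-34"},
    {"id": 3, "age_group": "18-34"},
    {"id": 4, "age_group": "35+"},
]
screener = QuotaScreener(targets={"18-34": 2, "35+": 1})
admitted, screened_out = run_screening(arrivals, screener)
print([p["id"] for p in admitted])
print([p["id"] for p in screened_out])
```

In this sketch the third respondent aged 18-34 is screened out because that cell has already reached its target, which is how a quota keeps one group from dominating the final sample. Real panel integrations typically handle this through the survey platform's own quota settings rather than custom code.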

O’Brochta, W., & Parikh, S. (2021). Anomalous responses on Amazon Mechanical Turk: An Indian perspective. Research & Politics, 8(2). https://doi.org/10.1177/20531680211016971

Abstract. What can researchers do to address anomalous survey and experimental responses on Amazon Mechanical Turk (MTurk)? Much of the anomalous response problem has been traced to India, and several survey and technological techniques have been developed to detect foreign workers accessing US-specific surveys. We survey Indian MTurkers and find that 26% pass survey questions used to detect foreign workers, and 3% claim to be located in the United States. We show that restricting respondents to Master Workers and removing the US location requirement encourages Indian MTurkers to correctly self-report their location, helping to reduce anomalous responses among US respondents and to improve data quality. Based on these results, we outline key considerations for researchers seeking to maximize data quality while keeping costs low.

The wealth of material available online is irresistible to social researchers who are trying to understand contemporary experiences, perspectives, and events. The ethical collection and use of such material is anything but straightforward. Find open-access articles that explore different approaches.